As organisations look to scale AI, on-premises infrastructure holds the key to achieving better business value.

By Tony Bartlett, Director of Data Centre Compute, Dell Technologies South Africa

AI is migrating from computers and phones to robots, self-driving cars and pretty much any digital space imaginable. Even NVIDIA CEO Jensen Huang called this out at the company's recent GPU Technology Conference (GTC).

Generative AI adoption is growing rapidly: according to Accenture, 75% of knowledge workers use it to create content such as sales collateral or to automate coding. In a Boston Consulting Group survey, 90% of South African employees who use GenAI for work said it has saved them time, and 42% reported confidence in its impact on their work. Around 87% of local respondents reported improved quality of work, with more time to focus on strategic tasks and less time spent on administration.

The key to effective AI outcomes is a centralised AI strategy that takes into account various technical and operational factors. Different use cases will require different models and processes, as well as access to high-quality data to help maximise productivity.

Increased productivity isn’t the only consideration. As organisations put their AI projects through their paces, they must also respect their budgets and protect their data. And as with all emerging technologies, AI presents implementation hurdles.

AI deployment options

Chief among these hurdles are large language models (LLMs), which require training, inferencing, fine-tuning and optimisation techniques to ground GenAI outputs. Some organisations also struggle with AI skills gaps, making it difficult to use such technologies effectively.

To ease these burdens, some organisations choose to consume LLMs managed by public cloud providers, or to run LLMs of their choosing on those third-party platforms.

As attractive as the public cloud is for shrinking launch timelines, it also involves trade-offs. Variable costs, higher latency, and data security and sovereignty concerns can make running AI workloads there unappealing or even untenable.

AI workloads also present more variables for IT decision makers to consider. More choice is welcome, but it can also compound complexity.

Accordingly, running infrastructure (including compute, storage and GPUs) on premises gives organisations control over every aspect of deployment. For instance, on-premises infrastructure offers great value for large, predictable AI workloads, keeping compute close to where the data is processed and helping organisations stay within budget.

Strong organisational controls are essential for safeguarding AI models, inputs, and outputs – which may include sensitive IP – from malicious actors and data leakage.

To comply with local regulations, some organisations must meet data security and data sovereignty mandates that require data to remain in specific geographic locations. By running AI workloads where the data resides, organisations can remain compliant while also avoiding redundant data transfers between systems and locations.

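To that end, many organisations today are adapting open-source LLMs to their needs with retrieval-augmented generation (RAG), which retrieves relevant organisational data at query time and supplies it to the model alongside the user's prompt. With RAG, organisations can tailor chatbot responses to specific use cases.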
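To make the RAG pattern more concrete, here is a minimal Python sketch. It assumes a toy word-overlap retriever in place of the embedding model and vector database a production system would typically use, and it omits the call to the LLM itself. The function names `retrieve` and `build_prompt` and the sample documents are hypothetical, for illustration only: the sketch ranks stored passages by similarity to the user's question and places the best matches in the prompt ahead of the question, so the model answers from the organisation's own data.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lowercase the text and split it into alphanumeric words.
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two term-frequency vectors.
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    # Hypothetical toy retriever: rank documents by word overlap with the
    # query and keep the top k that share at least one word with it.
    q = Counter(tokenize(query))
    scored = [(cosine(q, Counter(tokenize(d))), d) for d in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [d for score, d in scored[:k] if score > 0]


def build_prompt(query: str, documents: list[str], k: int = 2) -> str:
    # Ground the model by placing the retrieved passages before the question.
    context = retrieve(query, documents, k)
    lines = ["Answer using only the context below.", "Context:"]
    lines += [f"- {passage}" for passage in context]
    lines += ["", f"Question: {query}"]
    return "\n".join(lines)


if __name__ == "__main__":
    # Hypothetical in-house documents; in practice these stay on premises.
    docs = [
        "Leave requests must be submitted through the HR portal.",
        "The data centre in Johannesburg hosts customer records.",
        "Expense claims are reimbursed within 30 days.",
    ]
    # The resulting prompt would then be sent to a locally hosted LLM.
    print(build_prompt("Where are customer records hosted?", docs, k=1))
```

Because retrieval happens at query time, the underlying model does not need retraining when documents change; updating the document store is enough.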

Moreover, as LLMs continue to shrink while maintaining high performance and reliability, more models are running on devices such as AI PCs and workstations, both on premises and at the edge.

These factors help explain why 73% of organisations prefer to self-deploy LLMs on infrastructure in data centres, on devices and at edge locations, according to Enterprise Strategy Group (ESG).

On-premises AI can be more cost effective

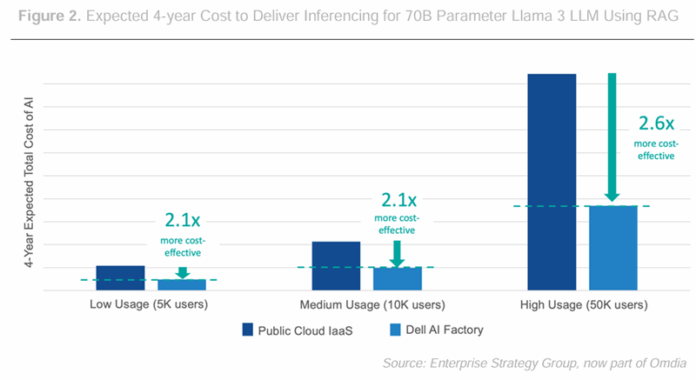

Empirical data comparing the value of on-premises deployments with the public cloud are scarce. However, a recent ESG study compared the expected costs of delivering inferencing for a text-based chatbot powered by a 70-billion-parameter open-source LLM running RAG on premises with those of a comparable public cloud solution from Amazon Web Services.

The analysis, which estimated the cost of infrastructure and system administration for between 5,000 and 50,000 users over a four-year period, found that running the workload on premises was as much as 62% more cost-effective for inferencing than the public cloud.

The same on-premises implementation was also as much as 75% more cost-effective than using an API-based service from OpenAI. Of course, every organisation's savings will vary by use case and modelling scenario.

The on-premises solution featured in the ESG study was built on the Dell AI Factory, a modern approach designed to help organisations scale their AI solutions and achieve better business outcomes.

The Dell AI Factory blends Dell infrastructure and professional services, with hooks into an open ecosystem of software vendors and other partners who can support your AI use cases today and in the future.

Articulating a centralised strategy for this new era of AI everywhere is great, but the work doesn’t stop there. The Dell AI Factory can help guide you on your AI journey.

Article Provided